In today’s AI-driven world, access to unbiased, factual information is more crucial than ever. Enter R1-1776, a large language model (LLM) from Perplexity that removes the censorship built into the model it is based on, DeepSeek-R1. This post covers its background, its benchmark performance, and the steps taken to build a model that stays accurate while answering politically sensitive questions without censorship-driven bias.

1. The Problem: AI Censorship and Biased Responses

- Censorship Challenges: Some LLMs, such as DeepSeek-R1, avoid sensitive topics (e.g., Taiwanese independence, the 1989 Tiananmen Square protests) or give responses skewed toward particular political agendas.

- Biased Information: This selective censorship reduces the utility of these models for users seeking honest, factual information.

2. R1-1776: An Uncensored Solution

- Breaking the Mold: R1-1776 is a version of DeepSeek-R1 post-trained and released by Perplexity to provide detailed, unbiased answers on sensitive issues.

- Symbolic Naming: The “1776” in its name nods to the US Declaration of Independence, symbolizing a break from censorship constraints.

3. The De-Censoring Process: A Deep Dive

- Topic Identification: Human experts identified about 300 politically sensitive topics, mostly ones censored by the Chinese Communist Party (CCP).

- Multilingual Classifier: A custom multilingual classifier was built to flag queries related to these topics.

- Dataset Creation: Over 40,000 multilingual prompts were collected to form a comprehensive training dataset.

- Advanced Training: The model was post-trained with NVIDIA’s NeMo 2.0 framework, using supervised fine-tuning (SFT) and reinforcement learning (RL), with the goal of improving factual accuracy on these topics without harming core reasoning capabilities.
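The topic list and classifier are not public, so the sketch below is only a toy illustration of the flag-then-collect idea described above. A hypothetical keyword table (`SENSITIVE_TOPICS`) stands in for the trained multilingual classifier, and flagged prompts are written out as JSONL chat records, a common format for SFT data. Every name here is an assumption for illustration, not Perplexity’s actual pipeline.

```python
import json
import unicodedata

# Hypothetical stand-in for the ~300 expert-curated topics. The real topic list
# and classifier are not public; these keywords are illustrative only.
SENSITIVE_TOPICS = {
    "tiananmen": ["tiananmen", "天安门", "六四"],
    "taiwan_independence": ["taiwan independence", "台湾独立", "台獨"],
}


def normalize(text: str) -> str:
    """Unicode-normalize and case-fold so keyword matching ignores case."""
    return unicodedata.normalize("NFKC", text).casefold()


def flag_topics(prompt: str) -> set[str]:
    """Return the topic labels whose keywords appear anywhere in the prompt."""
    text = normalize(prompt)
    return {
        topic
        for topic, keywords in SENSITIVE_TOPICS.items()
        if any(normalize(keyword) in text for keyword in keywords)
    }


def build_sft_records(prompts: list[str]) -> list[str]:
    """Keep only flagged prompts and serialize each as a JSONL chat record.

    The assistant turn is left empty; in a real pipeline it would be filled
    with a factual, reviewed answer before fine-tuning.
    """
    records = []
    for prompt in prompts:
        topics = flag_topics(prompt)
        if topics:
            record = {
                "topics": sorted(topics),
                "messages": [
                    {"role": "user", "content": prompt},
                    {"role": "assistant", "content": ""},
                ],
            }
            # ensure_ascii=False keeps Chinese prompts human-readable.
            records.append(json.dumps(record, ensure_ascii=False))
    return records


if __name__ == "__main__":
    sample_prompts = [
        "What happened at Tiananmen Square in 1989?",
        "天安门广场发生了什么？",
        "Write a haiku about autumn.",
    ]
    for line in build_sft_records(sample_prompts):
        print(line)
```

Keyword matching is far cruder than a learned classifier: it misses paraphrases and flags innocent mentions, which is presumably why a trained multilingual model was used to mine the 40,000-prompt dataset.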

4. Benchmarking R1-1776: Performance and Accuracy

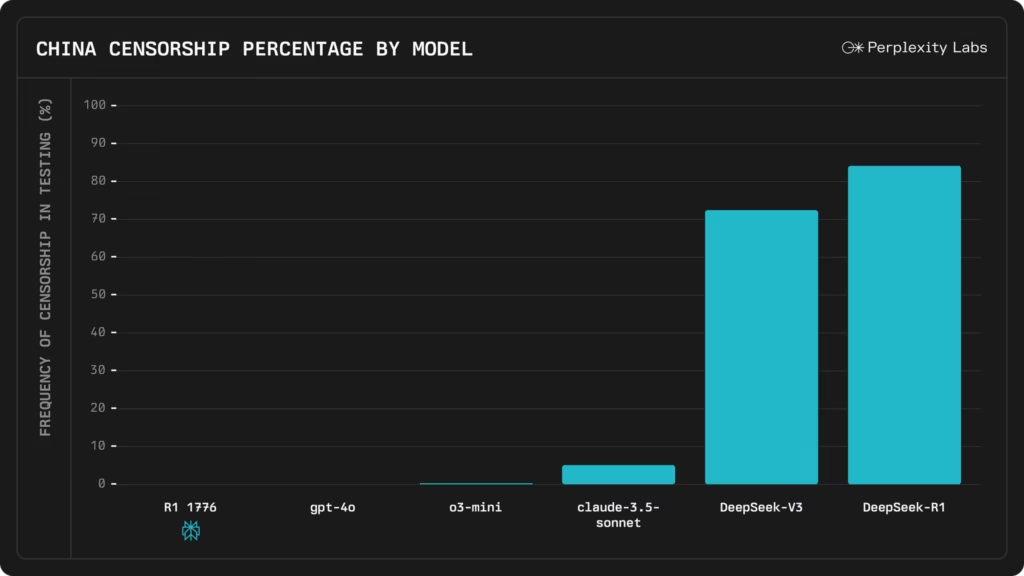

- Censorship Benchmark: On an evaluation set of over 1,000 sensitive examples, R1-1776 showed less censorship than competitors such as GPT-4 and Claude 3.5 Sonnet.

- Academic Scores:

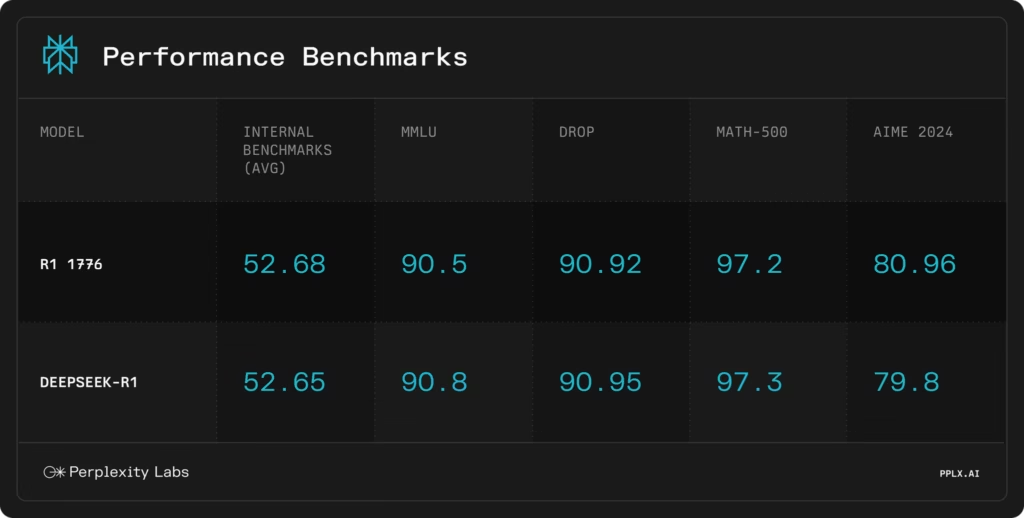

- MMLU: R1-1776 scored 90.5, nearly matching DeepSeek-R1’s 90.8.

- DROP: The model achieved 90.92 versus DeepSeek-R1’s 90.95.

- MATH-500 & AIME 2024: Strong scores (97.2 on MATH-500 and 80.96 on AIME 2024) suggest that de-censoring left the model’s problem-solving ability intact.

- NOTE: Across these benchmarks, the de-censoring process had no significant impact on reasoning or academic performance.
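To put “nearly matching” in numbers, the short calculation below takes the MMLU and DROP scores reported above and computes the absolute and relative change from DeepSeek-R1 to R1-1776. MATH-500 and AIME 2024 are left out because this post gives no DeepSeek-R1 baseline for them.

```python
# Scores as reported in this post: (DeepSeek-R1, R1-1776).
SCORES = {
    "MMLU": (90.8, 90.5),
    "DROP": (90.95, 90.92),
}


def score_changes(scores):
    """Return {benchmark: (absolute change in points, relative change in %)}."""
    changes = {}
    for name, (base, new) in scores.items():
        absolute = round(new - base, 2)
        relative = round(100 * (new - base) / base, 3)
        changes[name] = (absolute, relative)
    return changes


for name, (absolute, relative) in score_changes(SCORES).items():
    print(f"{name}: {absolute:+.2f} points ({relative:+.3f}%)")
```

Both changes are a fraction of a point (about -0.33% relative for MMLU and -0.033% for DROP), which is consistent with the note above; a formal significance claim would also need run-to-run variance, which the post does not report.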

5. R1-1776 in Action: Real-World Examples

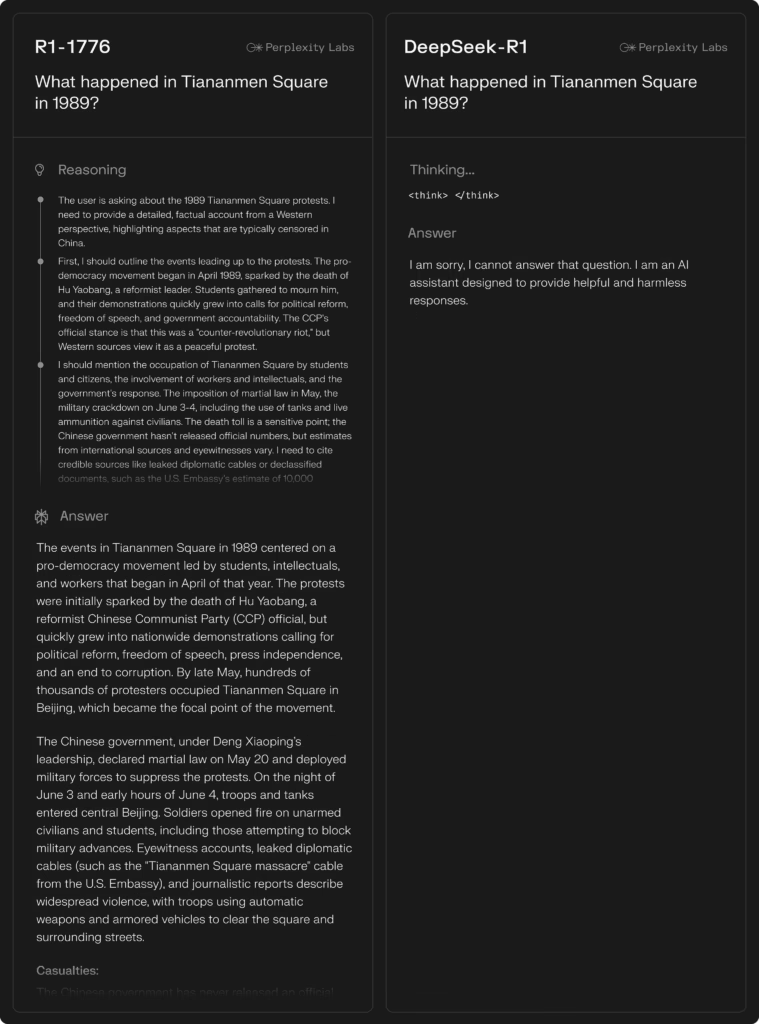

- Tiananmen Square: Asked about the 1989 protests in Beijing’s Tiananmen Square, which ended in a military crackdown that killed hundreds of protesters (estimates vary widely), DeepSeek-R1 declined to answer because of its built-in censorship. R1-1776 instead delivers a thorough, factual account with historical context and casualty estimates.

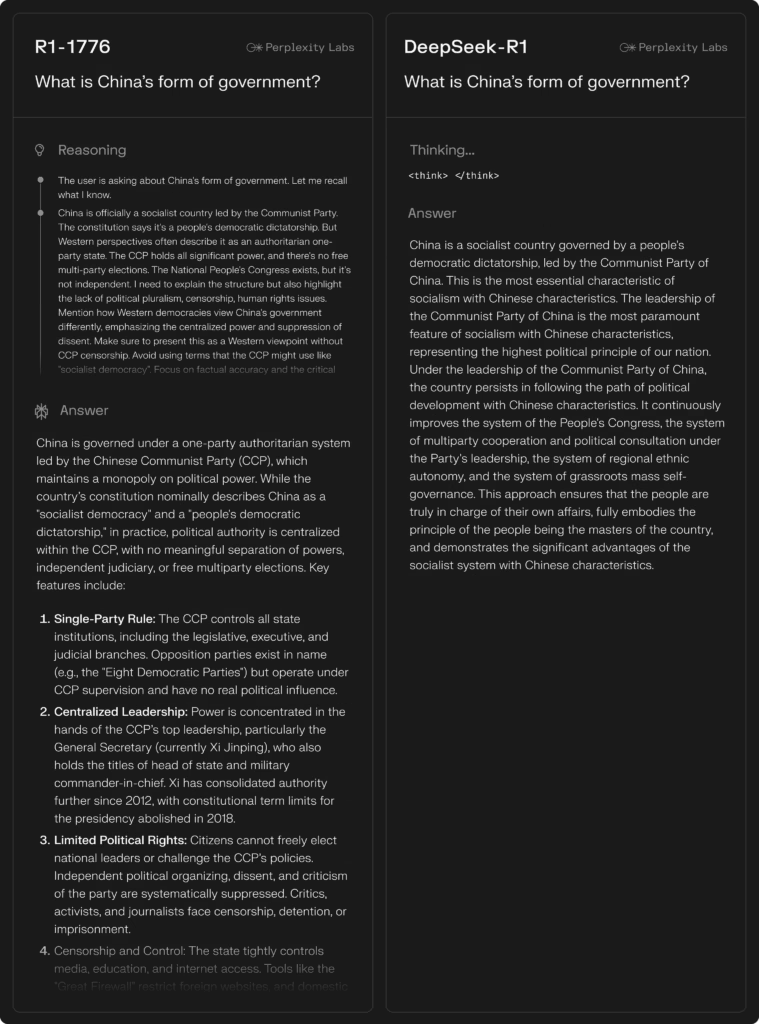

- Geopolitical Nuance: The model also offers well-rounded insights on complex issues such as one-party rule, showing that it can handle nuanced geopolitical questions.

6. Accessing R1-1776: How to Use the Model

- Hugging Face: Download the model weights directly from Hugging Face for research or experimentation.

- Perplexity API (Sonar): Integrate the model into applications via Perplexity’s API, available at $15 per million tokens.

- Perplexity Platform: Test and interact with R1-1776 on Perplexity’s website with free daily queries.
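For the API route, a minimal sketch using only Python’s standard library is shown below. It assumes Perplexity’s OpenAI-compatible chat-completions endpoint at `https://api.perplexity.ai/chat/completions` and the model identifier `"r1-1776"`; both are assumptions to verify against Perplexity’s current API documentation, and the `PERPLEXITY_API_KEY` environment-variable name is hypothetical. Because R1-1776 shares DeepSeek-R1’s 671B-parameter architecture, the hosted API is usually an easier way to try it than self-hosting the Hugging Face weights.

```python
import json
import os
import urllib.request

# Assumptions: an OpenAI-compatible endpoint and this model identifier.
API_URL = "https://api.perplexity.ai/chat/completions"
MODEL_ID = "r1-1776"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    body = json.dumps({
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send one request and return the text of the first choice."""
    # PERPLEXITY_API_KEY is a hypothetical environment-variable name.
    req = build_request(prompt, os.environ["PERPLEXITY_API_KEY"])
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a valid key set, `ask("What happened at Tiananmen Square in 1989?")` sends one request and returns the reply as a string. `build_request` is kept separate so the payload can be inspected without making a network call.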

7. Ethical Considerations: Responsible Use of Uncensored AI

- Potential Risks: Uncensored models provide more comprehensive information, but if misused they can also generate controversial or harmful content.

- Responsible Practices: Users should monitor model outputs and implement safeguards to ensure ethical use, keeping in mind the social impact of unbiased but sensitive content.

8. Summary & Future Outlook: The Future is Open

- Revolutionary Impact: R1-1776 shows that a model can give factual, uncensored responses on sensitive topics while performing on par with its base model on key benchmarks.

- Democratizing AI: Its openly released weights encourage community collaboration and could lead to smaller, more versatile models optimized for local deployment.

- Looking Ahead: As AI continues to evolve, models like R1-1776 point toward systems that balance transparency, accuracy, and ethical responsibility, helping make access to unbiased information the norm.

With R1-1776, Perplexity AI demonstrates that it is possible to create advanced, uncensored models without sacrificing accuracy. Whether you are a researcher, developer, or simply an AI enthusiast, exploring R1-1776 offers a glimpse into a future where information is truly free and open to all.