In the fast-paced world of artificial intelligence (AI), Large Language Models (LLMs) have redefined how we interact with technology by producing human-like text. Yet one major hurdle has held them back: on their own, they are cut off from real-time data and external tools. Enter the Model Context Protocol (MCP), an open standard developed by Anthropic and open-sourced in November 2024. MCP bridges this gap by giving AI systems a standard way to reach the outside world. This blog covers what you need to know about MCP servers, from their architecture to their real-world applications.

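1. What is MCP?

MCP is an open standard that defines how AI applications connect LLMs to external data sources and tools. Instead of writing a custom integration for every combination of AI application and data source, developers expose a system once as an MCP server, and any MCP-compatible application can then use it.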

2. The Technical Architecture of MCP Servers

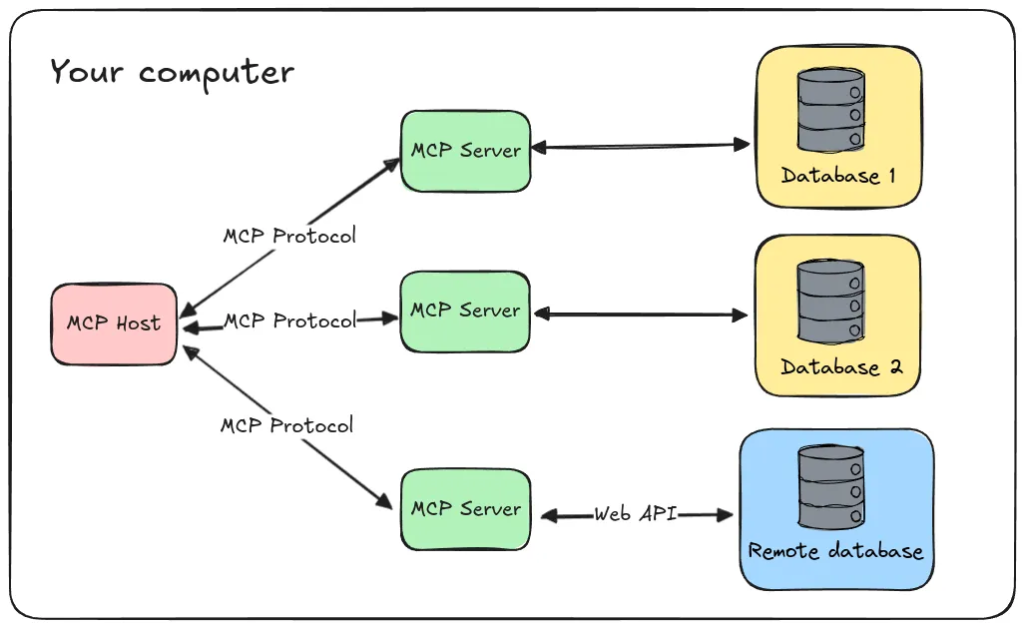

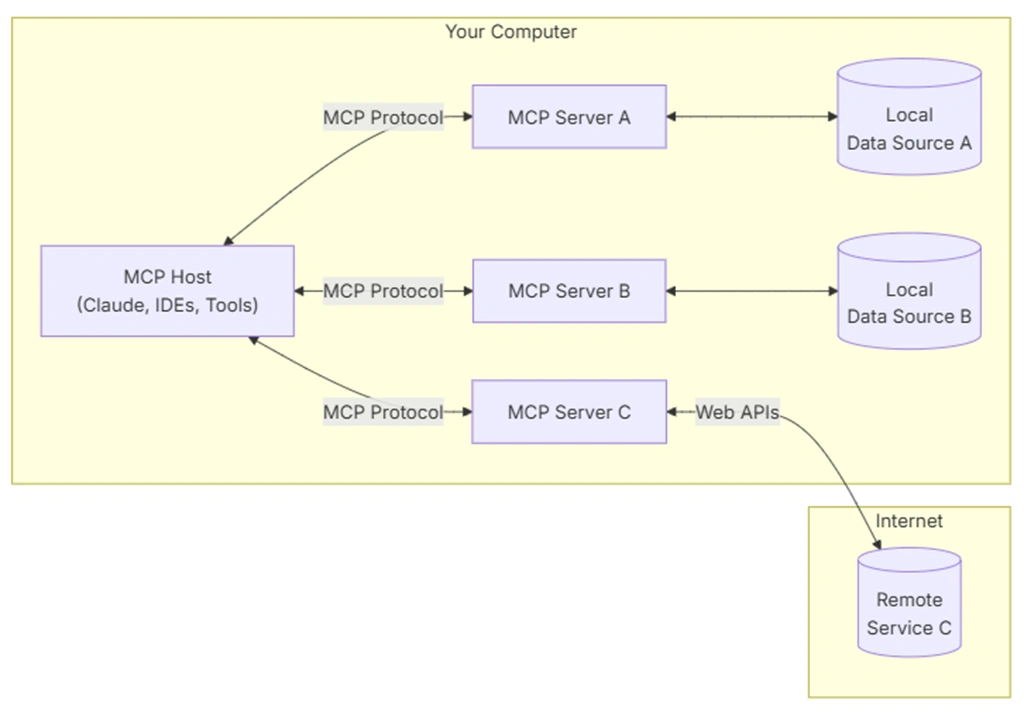

MCP follows a client-server architecture with several core components that work together to enable AI systems to interact with external data and tools. The main components in this architecture include:

- Host Application: The application users interact with, which initiates connections to MCP servers. Examples include Claude Desktop, AI-enhanced IDEs like Cursor, and web-based LLM chat interfaces.

- MCP Client: Integrated within the host application to handle connections with MCP servers, translating between the host’s requirements and the Model Context Protocol.

- MCP Server: A program that exposes specific capabilities, such as access to files, databases, or APIs. Each standalone server typically focuses on one integration point, like GitHub for repository access or PostgreSQL for database operations.

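- Transport Layer: The communication mechanism between clients and servers. MCP supports two primary transport methods:

  - STDIO (Standard Input/Output): Used mainly for local integrations, where the client launches the server as a subprocess on the same machine and exchanges messages over its standard input and output.

  - HTTP+SSE (Server-Sent Events): Used for remote connections, with HTTP POST requests carrying client messages and an SSE stream carrying server responses and streamed updates. Newer revisions of the specification replace this with a "Streamable HTTP" transport.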

All communication in MCP uses JSON-RPC 2.0 as the underlying message standard, providing a standardized structure for requests, responses, and notifications.
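To make the message format concrete, here is a minimal Python sketch of the three JSON-RPC 2.0 message kinds, plus the newline-delimited framing the STDIO transport uses (one JSON message per line). The method names `tools/call` and `notifications/initialized` come from the MCP specification; the helper functions and the `get_forecast` arguments are illustrative assumptions, not part of any SDK.

```python
import json

# Hypothetical helpers illustrating JSON-RPC 2.0 message shapes as used by MCP.


def make_request(req_id, method, params=None):
    """A request carries an id, so the peer must send back a response."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_response(req_id, result):
    """A successful response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def make_notification(method, params=None):
    """A notification has no id, so no response is expected."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def encode_stdio(msg):
    """Frame one message for the STDIO transport: compact JSON plus a newline."""
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    return json.dumps(msg, separators=(",", ":")) + "\n"


def decode_stdio(stream_text):
    """Split a chunk of STDIO output back into messages, one per non-empty line."""
    return [json.loads(line) for line in stream_text.splitlines() if line.strip()]


if __name__ == "__main__":
    wire = encode_stdio(make_notification("notifications/initialized")) + encode_stdio(
        make_request(1, "tools/call",
                     {"name": "get_forecast", "arguments": {"location": "Paris"}}))
    for message in decode_stdio(wire):
        kind = "request" if "id" in message else "notification"
        print(kind, message["method"])
```

The presence or absence of `id` is what tells the receiver whether it owes a reply, which is why notifications such as `notifications/initialized` never produce a response.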

3. How MCP Servers Work: Operational Mechanisms

Ever wondered how MCP servers deliver real-time data to AI? Here’s the process in action:

- Protocol Handshake:

  - The MCP client connects to available servers.

  - It queries each server's capabilities (e.g., tools, resources).

  - The client registers these for use by the AI.

- Request Processing:

  - The AI detects a need for external data based on a user's query.

  - It selects an appropriate MCP capability.

  - The user is prompted to approve access to the external resource.

- Data Exchange:

  - The client sends a standardized request to the MCP server.

  - The server fetches data (e.g., from an API or database) and returns it.

  - The AI integrates this data into its response, delivering accurate, up-to-date answers.

This seamless flow happens in seconds, making AI interactions feel intuitive and informed.
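The three phases above can be sketched as a toy client and server living in the same Python process. This is an illustration under simplifying assumptions: `FakeWeatherServer`, `ToyClient`, and the `approve` callback are hypothetical, the server simply echoes the client's protocol version, and a real client would exchange these messages with a separate server process instead of calling a method directly.

```python
import json

# Toy, in-process model of the handshake -> approval -> data exchange flow.
# All class names and the canned forecast are hypothetical.


class FakeWeatherServer:
    """Answers a few MCP-style JSON-RPC methods from memory."""

    def handle(self, msg):
        if "id" not in msg:
            return None  # notifications get no reply
        method = msg["method"]
        if method == "initialize":
            # Simplified: echo the client's protocol version instead of negotiating.
            result = {"protocolVersion": msg["params"]["protocolVersion"],
                      "capabilities": {"tools": {}},
                      "serverInfo": {"name": "weather", "version": "0.1"}}
        elif method == "tools/list":
            result = {"tools": [{
                "name": "get_forecast",
                "description": "Get the weather forecast for a location.",
                "inputSchema": {"type": "object",
                                "properties": {"location": {"type": "string"}},
                                "required": ["location"]}}]}
        elif method == "tools/call":
            location = msg["params"]["arguments"]["location"]
            text = json.dumps({"location": location, "forecast": "sunny"})
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            return {"jsonrpc": "2.0", "id": msg["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


class ToyClient:
    def __init__(self, server):
        self.server = server
        self.next_id = 0
        self.tools = {}

    def request(self, method, params):
        self.next_id += 1
        reply = self.server.handle({"jsonrpc": "2.0", "id": self.next_id,
                                    "method": method, "params": params})
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]

    def notify(self, method):
        self.server.handle({"jsonrpc": "2.0", "method": method})

    def connect(self):
        # Phase 1, handshake: agree on a protocol version, then discover tools.
        self.request("initialize", {"protocolVersion": "2024-11-05", "capabilities": {},
                                    "clientInfo": {"name": "toy-client", "version": "0.1"}})
        self.notify("notifications/initialized")
        for tool in self.request("tools/list", {})["tools"]:
            self.tools[tool["name"]] = tool

    def call_tool(self, name, arguments, approve):
        # Phase 2, request processing: the tool must exist and the user must approve.
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        if not approve(name, arguments):
            return None
        # Phase 3, data exchange: send tools/call and collect the text content.
        result = self.request("tools/call", {"name": name, "arguments": arguments})
        return [item["text"] for item in result["content"] if item["type"] == "text"]


if __name__ == "__main__":
    client = ToyClient(FakeWeatherServer())
    client.connect()
    print(sorted(client.tools))  # ['get_forecast']
    print(client.call_tool("get_forecast", {"location": "Paris"},
                           approve=lambda name, args: True))
```

Keeping approval as a callback in the client reflects the point above that the user, not the model, decides whether a tool actually runs.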

4. Key Components of MCP Servers

MCP servers provide three main types of capabilities or “primitives”:

- Resources: File-like, read-only data that clients can read to give the AI context, such as file contents, API responses, database schemas, or Git histories.

- Tools: Functions that the LLM can call (with user approval). Each tool has a name, a natural-language description, and a schema defining the inputs it expects; the code that actually does the work (for example, making the API calls) runs on the server. The server advertises its available tools, the AI selects one and supplies arguments that match its schema, and the server executes it and returns the result.

- Prompts: Predefined templates that guide the AI's interactions and help users accomplish specific, often repetitive, tasks.

What sets MCP servers apart is that the AI can discover these tools and resources dynamically. Unlike traditional setups where tools are hardcoded into the application, MCP lets the client ask a server, “What can you do?”, and adapt on the fly.
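The sketch below illustrates dynamic discovery using only the standard library. A hypothetical `MiniServer` registers tools, resources, and prompts, derives a simple input schema from each tool's type hints, and answers "What can you do?" with descriptions only, never the code itself. Real SDKs such as the official Python SDK's `FastMCP` work along similar lines but produce richer schemas; treat this as a simplified assumption, not their implementation.

```python
import inspect

# Hypothetical mini-registry for the three MCP primitives.
# Maps Python annotations to JSON Schema type names; anything else defaults to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


class MiniServer:
    def __init__(self):
        self.tools, self.resources, self.prompts = {}, {}, {}

    def tool(self, fn):
        """Register fn as a tool and derive an input schema from its signature."""
        params = inspect.signature(fn).parameters
        self.tools[fn.__name__] = {
            "fn": fn,
            "description": inspect.getdoc(fn) or "",
            "inputSchema": {
                "type": "object",
                "properties": {n: {"type": _JSON_TYPES.get(p.annotation, "string")}
                               for n, p in params.items()},
                # Parameters without defaults are required.
                "required": [n for n, p in params.items()
                             if p.default is inspect.Parameter.empty],
            },
        }
        return fn

    def resource(self, uri):
        """Register a read-only data source under a URI."""
        def register(fn):
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn):
        """Register a reusable prompt template."""
        self.prompts[fn.__name__] = fn
        return fn

    def describe(self):
        """Answer the client's 'What can you do?' without exposing any code."""
        return {
            "tools": [{"name": n, "description": t["description"],
                       "inputSchema": t["inputSchema"]} for n, t in self.tools.items()],
            "resources": sorted(self.resources),
            "prompts": sorted(self.prompts),
        }


server = MiniServer()


@server.tool
def get_forecast(location: str, days: int = 1) -> dict:
    """Get the weather forecast for a location."""
    return {"location": location, "days": days, "forecast": "sunny"}


@server.resource("docs://readme")
def readme() -> str:
    return "Weather server: call get_forecast with a city name."


@server.prompt
def summarize_forecast(location: str) -> str:
    return f"Summarize the weather forecast for {location} in one sentence."


if __name__ == "__main__":
    print(server.describe()["tools"][0]["inputSchema"]["required"])  # ['location']
```

Because the schema travels with the description, a client can add a new server at runtime and immediately know which arguments each tool expects.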

5. Getting Started with MCP Servers

For those interested in implementing MCP, the first step is setting up an MCP server and connecting it to relevant data sources. Various SDKs and tools are available to facilitate integration with languages such as Python, JavaScript, and TypeScript. Here’s a basic example of implementing an MCP server:

- Install the required packages:

```bash
# For Python (the official SDK is published on PyPI as "mcp")
pip install "mcp[cli]"
```

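- Create a simple MCP server (for example, `weather_server.py`):

```python
# Example: a weather MCP server built with the official Python SDK
from mcp.server.fastmcp import FastMCP

# The name identifies this server to connecting clients
app = FastMCP("weather")

@app.tool()
def get_forecast(location: str) -> dict:
    """Get the weather forecast for a given location."""
    # Placeholder data: replace with a call to a real weather API
    return {"forecast": "sunny", "temperature": 75}

if __name__ == "__main__":
    # Runs over the STDIO transport by default
    app.run()
```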

- Configure an MCP client (like Claude Desktop, via its `claude_desktop_config.json` file) to use this server:

```json
{
  "mcpServers": {
    "weather": {
      "command": "python",
      "args": ["path/to/your/weather_server.py"]
    }
  }
}
```
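If you already use other MCP servers, the new entry has to be merged into the existing `mcpServers` object rather than replacing it. A minimal sketch, assuming the config has the shape shown above; `add_mcp_server` and the `notes` server are hypothetical, and reading and writing the actual config file (whose location depends on your operating system) is left out.

```python
import json

# Hypothetical helper: add one server entry without touching the others.


def add_mcp_server(config: dict, name: str, command: str, args: list) -> dict:
    """Return a new config dict with `name` added under "mcpServers"."""
    servers = dict(config.get("mcpServers", {}))  # copy so the input is not mutated
    if name in servers:
        raise ValueError(f"server {name!r} is already configured")
    servers[name] = {"command": command, "args": list(args)}
    return {**config, "mcpServers": servers}


if __name__ == "__main__":
    existing = {"mcpServers": {"notes": {"command": "python", "args": ["notes_server.py"]}}}
    updated = add_mcp_server(existing, "weather", "python",
                             ["path/to/your/weather_server.py"])
    print(json.dumps(updated, indent=2))
```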

6. Benefits of Using MCP Servers

- Real-Time Data: Fetches fresh information when it is needed, so answers are not limited to outdated training data

- Better Security: Keeps credentials and sensitive data on the server side rather than handing them to the model

- Cost Savings: Uses less computing power than alternative methods

- Works Everywhere: Connects with any MCP-compatible application, whichever model it runs, and scales as your needs grow

- Common Language: Helps different tools talk to each other easily

- Better Understanding: AI gives more accurate answers using up-to-date information

- Two-Way Communication: AI can both retrieve information and take actions

- Easier Building: Developers build one MCP server per tool instead of a custom connection for every pairing of tool and AI application, which greatly reduces connection complexity

7. Challenges and Limitations of MCP Servers

- Needs Tech Skills: Hard for non-technical people to set up and use

- Location Limits: Most servers currently run on your local machine, and remote hosting is less mature

- Weak Access Control: Lacks good permission systems for different users

- Tool Overload: Models can struggle to pick the right tool when many are connected at once

- Centralized Governance: Anthropic largely steers the protocol, even though it is open source

- Constant Fixes Needed: Updates to tools can break connections

- No Task Chains: Missing built-in way to run multiple tools in order

- Hard to Fix Problems: Difficult to identify why things aren’t working

- Finding Tools is Hard: No central place to discover available servers

- Market Split Risk: Could lead to competing systems from other companies
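As the "No Task Chains" item notes, sequencing tools is left to the client or agent. A minimal sketch of doing that by hand, assuming a hypothetical `call_tool(name, arguments)` function that sends one `tools/call` request; the `search_issues` and `count_items` tools are made-up stubs.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical client-side chaining: MCP itself does not define workflows.
# Each step is (tool name, function that builds arguments from the previous result).
Step = Tuple[str, Callable[[Any], dict]]


def run_chain(call_tool: Callable[[str, dict], Any], steps: List[Step],
              initial: Any = None) -> Any:
    """Run tools in order, feeding each result into the next step's arguments."""
    result = initial
    for name, make_args in steps:
        try:
            result = call_tool(name, make_args(result))
        except Exception as exc:
            # Name the failing step, since debugging multi-tool runs is hard.
            raise RuntimeError(f"step {name!r} failed") from exc
    return result


def fake_call_tool(name: str, arguments: dict) -> Any:
    """Stub standing in for real MCP servers (both tools are made up)."""
    if name == "search_issues":
        return [f"login bug: {arguments['query']}", "crash on start"]
    if name == "count_items":
        return len(arguments["items"])
    raise KeyError(name)


if __name__ == "__main__":
    total = run_chain(fake_call_tool, [
        ("search_issues", lambda _: {"query": "timeout"}),
        ("count_items", lambda issues: {"items": issues}),
    ])
    print(total)  # 2
```

Wrapping each failure with the step name is a small mitigation for the "Hard to Fix Problems" item as well.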

8. Real-World Examples and Use Cases

Business Tools

Google Drive: Find and use your files

Slack: Send and read messages in channels

GitHub: Manage code and projects

Developer Tools

Git: Work with code repositories

Sentry: Find and analyze software errors

Raygun: Track application crashes

Data Tools

PostgreSQL: Access database information

SQLite: Work with local databases

Web Tools

Brave Search: Find information online

Fetch: Get web content for AI to read

Puppeteer: Control web browsers automatically

Productivity

Google Maps: Get directions and place information

Todoist: Manage your tasks and to-do lists

Linear: Track projects and issues

Creative & Media

EverArt: Create images with AI

Sequential Thinking: Solve problems step by step

Spotify: Control music playback

Cloud & Servers

Cloudflare: Manage web services

Docker: Control software containers

Kubernetes: Manage application services

Sales Tools

Stripe: Process payments

HubSpot: Manage customer information

Knowledge Tools

Obsidian: Search through your notes

Memory: Store and find information over time

Social Media

Bluesky: Schedule posts and analyze engagement

Discord: Read and send messages in servers

9. Future Trends and Innovations in MCP Servers

- Multi-User Support: Let many people use the same server

- Better Security: Standard ways to verify who can access what

- Central Control: One place to manage all your tools

- Tool Directory: Easy way to find and add new tools

- Better Workflows: Improved handling of multi-step tasks

- Smart Tool Selection: Help choosing the right tool for each job

- Remote Servers: Better support for servers running elsewhere

- Special-Purpose Systems: Ready-made setups for specific business needs

10. Summary

MCP servers are game-changers for AI. Instead of building custom integrations for every tool, developers can rely on these servers to let AI systems discover and use external resources at runtime.

Anthropic’s open-source protocol essentially creates a “USB-C port” for AI, standardizing how systems access databases, APIs, and files in real time while keeping credentials on the server side.

While still facing authentication challenges and requiring technical know-how, MCP is rapidly gaining adoption across industries and could fundamentally transform how we build AI that interacts meaningfully with the real world.