Are you tired of paying for API calls to use powerful AI models? Concerned about your data privacy when using cloud-based AI services? Looking for a way to experiment with cutting-edge language models without breaking the bank? Ollama might be exactly what you need.

Ollama is transforming the AI landscape by putting powerful language models directly into your hands – for free. Whether you’re a developer, researcher, or just AI-curious, this open-source tool opens up incredible possibilities without the usual barriers.

1. What is Ollama? Understanding the Basics

Ollama is a free, open-source application that allows you to download, manage, and run large language models (LLMs) directly on your own computer. Think of it as your personal AI assistant that lives entirely on your machine – no cloud servers, no API fees, no data leaving your computer.

2. Key Features That Make Ollama Special

- 100% Free and Open Source: Unlike commercial AI platforms, Ollama costs nothing to use and its code is publicly available.

- Complete Local Execution: All AI processing happens on your own hardware, with no data sent to external servers.

- Enhanced Privacy and Security: Your prompts, questions, and data never leave your machine.

- Impressive Model Library: Access to dozens of powerful open-source models like Llama, Mistral, and DeepSeek.

- API Access: Built-in HTTP service makes integration with applications straightforward.

- Framework Compatibility: Works seamlessly with popular AI frameworks like LangChain and LlamaIndex.

- Offline Capability: Once models are downloaded, you can use them without an internet connection.

3. Getting Started with Ollama: Installation Guide

Setting up Ollama is remarkably straightforward, making it accessible even for those new to AI tools. Let’s walk through the process step by step:

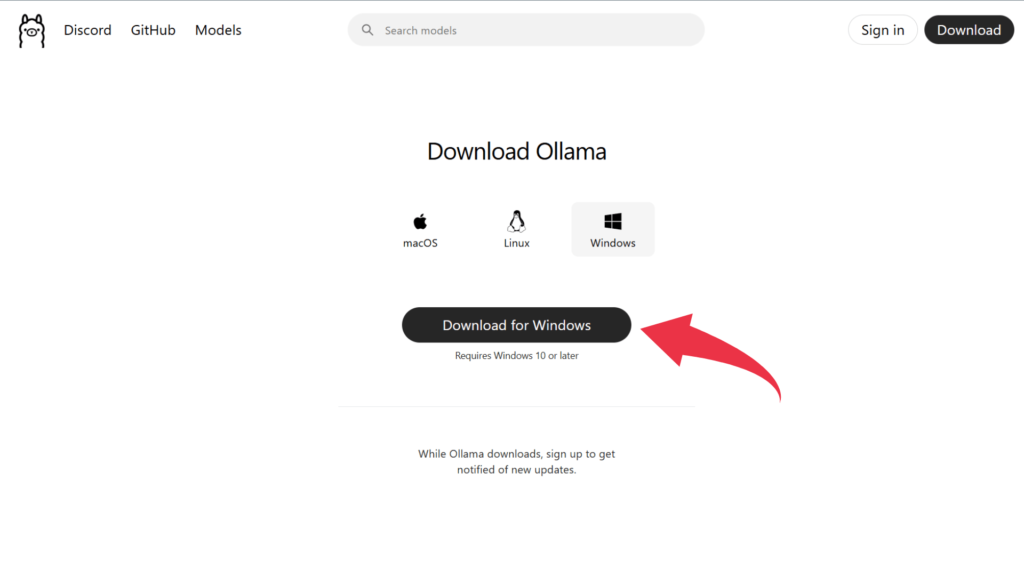

3.1 Downloading the Right Version

- Visit the official website at ollama.com

- Click on the download button for your operating system (Windows, macOS, or Linux)

- The download size is relatively small (the application itself, not the models)

3.2 Installation Process

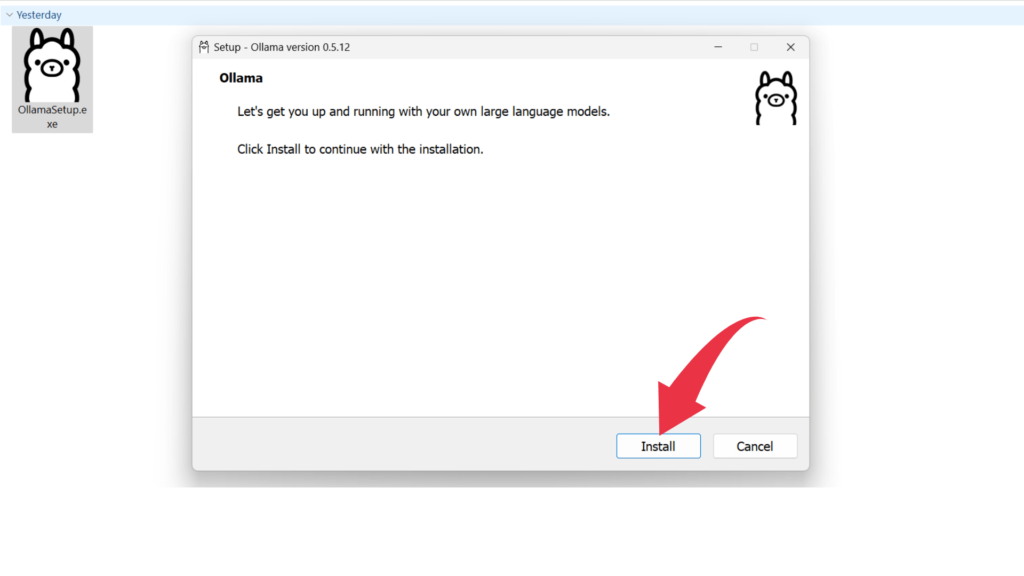

- For Windows users:

  - Locate the downloaded installer file

  - Double-click to launch the installer

  - Follow the on-screen instructions to complete installation

  - No complex configuration is required

- For macOS users:

  - Open the downloaded .dmg file

  - Drag the Ollama icon to your Applications folder

  - Launch Ollama from your Applications folder

- For Linux users:

  - Open a terminal

  - Run the installation command provided on the Ollama website

  - The exact command may vary depending on your Linux distribution

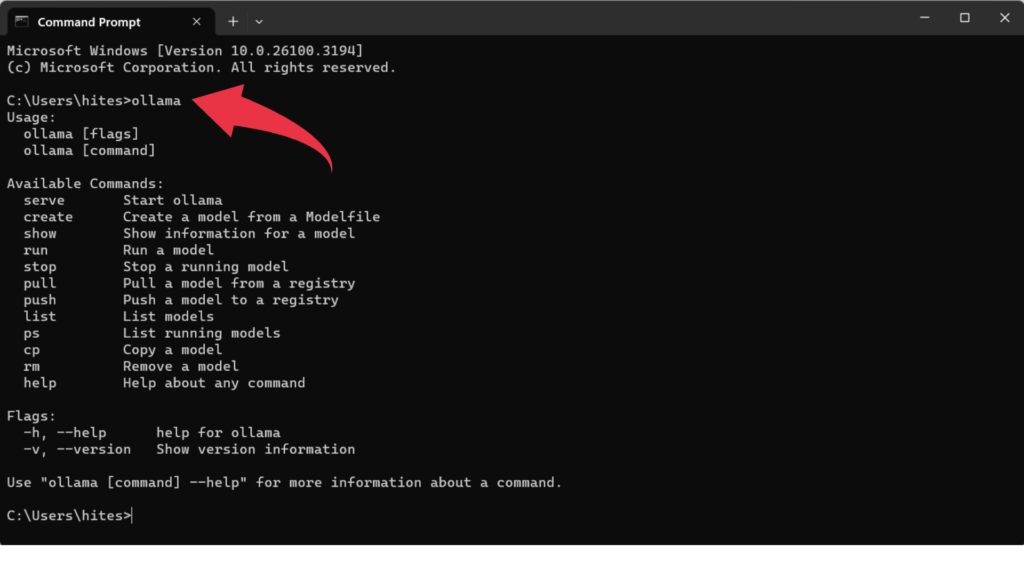

3.3 Verifying Your Installation

After installation, Ollama runs as a background service without a graphical user interface. To confirm it’s working properly:

- Open a command prompt or terminal window

- Type `ollama` and press Enter

- You should see a list of available commands like `run`, `list`, `pull`, and others

- This indicates Ollama is installed correctly and ready to use
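The same check can be scripted. The following is a minimal sketch, assuming the `ollama` binary is on your PATH and supports the `--version` flag; the helper name `ollama_version` is made up for this example.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(binary: str = "ollama") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if it cannot be run."""
    path = shutil.which(binary)
    if path is None:
        return None  # the binary is not on PATH
    try:
        result = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip() or None


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "Ollama was not found on PATH")
```

If this prints a version string, the command-line tool is installed; if not, reopen your terminal after installation so the updated PATH is picked up.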

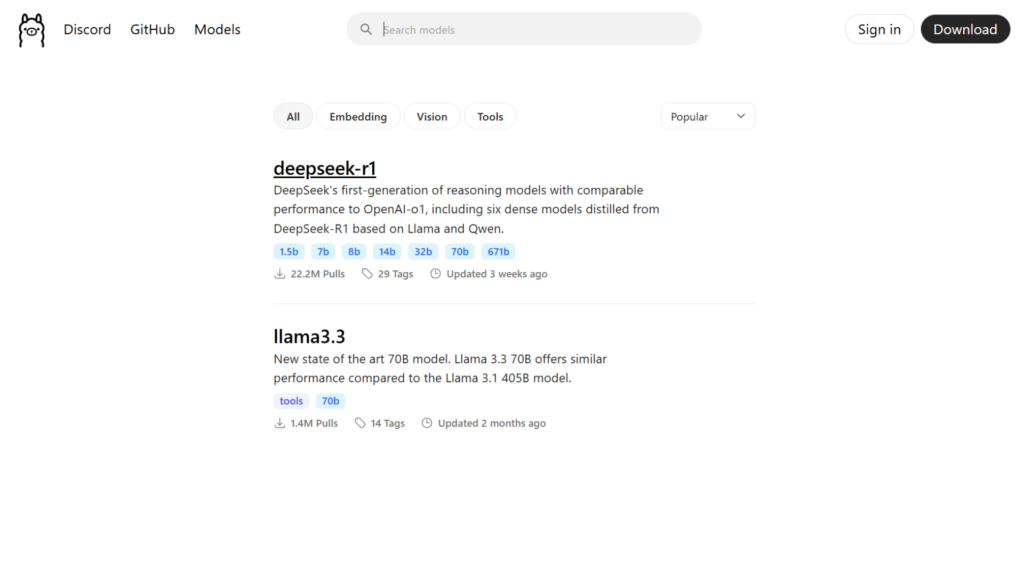

4. Exploring the Model Library: Finding the Perfect AI for Your Needs

One of Ollama’s greatest strengths is its extensive library of open-source models. Let’s explore how to browse and choose the right model for your specific requirements:

4.1 Navigating the Model Repository

- Visit ollama.com/models in your web browser

- Browse through the comprehensive collection of available models

- Models are organized by category:

  - Text generation: For conversation, writing, and general-purpose use

  - Vision: Models that can understand and describe images

  - Embedding: Specialized for text analysis and similarity searches

  - Tools: Models that support tool (function) calling, useful for agents and application integrations

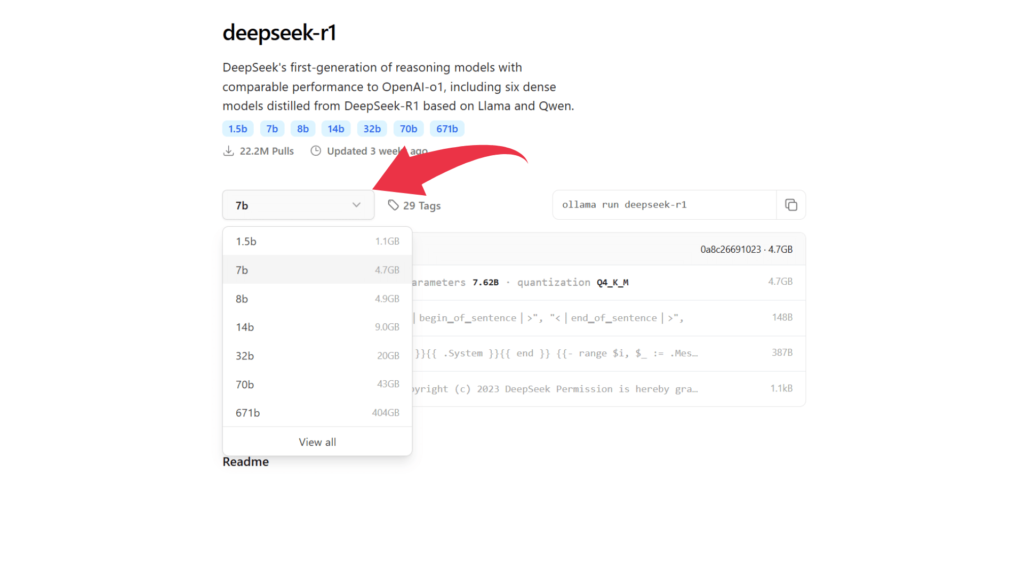

4.2 Understanding Model Sizes and Requirements

Models come in various sizes, each with different hardware requirements:

- Small models (3B-7B parameters): Run efficiently on most modern computers with at least 8GB of RAM

- Medium models (8B-13B parameters): Perform better with 16GB+ of RAM

- Large models (30B+ parameters): Require robust hardware with 32GB+ RAM and good GPU support
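To see where these tiers come from, you can do a rough back-of-the-envelope estimate: memory is roughly the parameter count times the bytes stored per weight, plus some working overhead. The sketch below assumes a 4-bit quantized model and a 20% overhead factor; both numbers are illustrative assumptions, not values published by Ollama, and real usage also depends on context length.

```python
def estimate_model_memory_gb(params_billions: float,
                             bits_per_weight: float = 4.0,
                             overhead: float = 1.2) -> float:
    """Rough memory estimate in GB for holding a model's weights.

    bits_per_weight and overhead are assumptions for illustration:
    4 bits corresponds to a 4-bit quantized model, and 1.2 adds 20%
    for runtime buffers.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


if __name__ == "__main__":
    for size in (3, 7, 13, 30):
        print(f"{size}B parameters: ~{estimate_model_memory_gb(size):.1f} GB")
```

The operating system and other applications need memory too, which is why the RAM figures above are noticeably higher than the raw weight sizes.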

4.3 Recommended Models for Beginners

If you’re just starting out or have modest hardware:

- DeepSeek-R1:1.5b: A compact reasoning model that can help with programming and runs on standard hardware

- Mistral-7B: Great all-around performance with reasonable resource requirements

- Phi-4: Microsoft’s 14B-parameter model, compact for its capability; it works well on laptops with 16GB of RAM

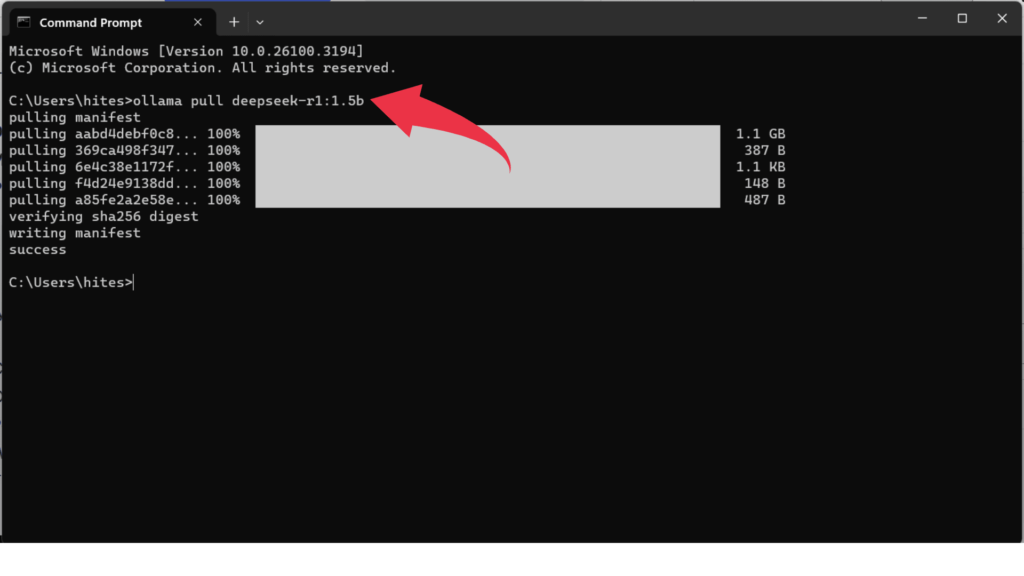

4.4 Downloading Your First Model

Once you’ve selected a model:

- Copy the download command shown on the website (e.g., `ollama pull deepseek-r1:1.5b`)

- Open your terminal or command prompt

- Paste and execute the command

- Wait for the download to complete (models range from roughly 1GB for the smallest to 30GB+ for the largest)

- The terminal will show download progress and notify you when it’s ready

You can read my blog post on DeepSeek R1: DeepSeek R1 AI: Open‑Source Model Disrupting Global Innovation

5. Using Ollama: Basic Commands and Interactions

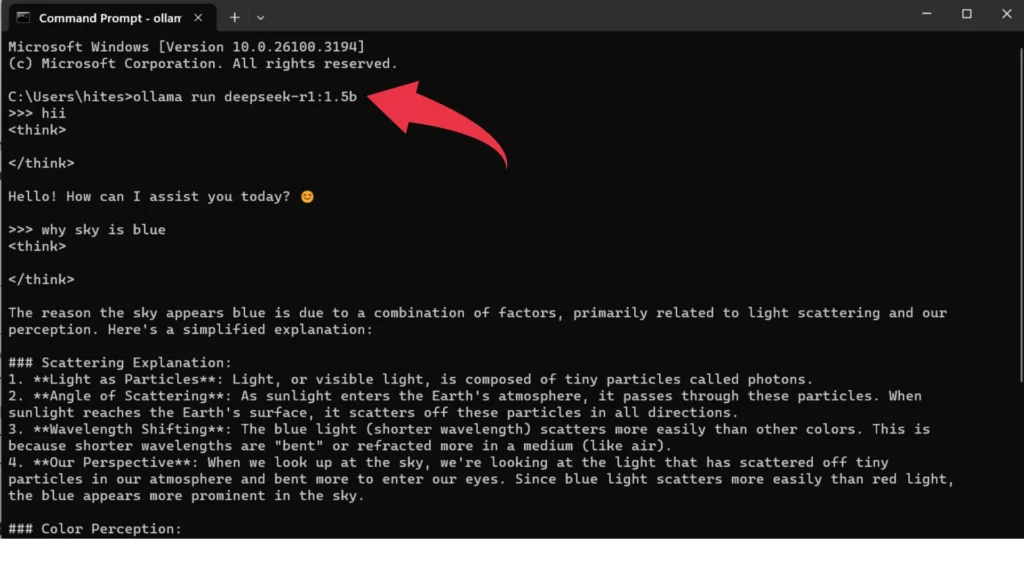

Now that you have Ollama installed and at least one model downloaded, let’s explore the essential commands for interacting with your AI models:

5.1 Starting a Conversation with a Model

To begin using a model:

- Open your terminal or command prompt

- Type `ollama run modelname` (replace “modelname” with your chosen model)

- For example: `ollama run deepseek-r1:1.5b`

- The model will initialize, and you’ll see a prompt where you can start typing

- Type your questions or prompts and press Enter to receive responses

5.2 Essential Commands for Managing Your Models

- List installed models: `ollama list`

- Download a new model: `ollama pull modelname`

- Remove a model: `ollama rm modelname`

- End a conversation: Type `/bye` during an active model session

- Get model information: `ollama show modelname`

5.3 Switching Between Different Models

Ollama makes it easy to work with multiple models:

- End your current session with `/bye`

- Start a new session with a different model: `ollama run anothermodelname`

- This allows you to compare different models’ responses to the same prompts
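If you compare models often, switching sessions by hand gets tedious. Here is a minimal sketch that sends one prompt to several models through Ollama's local HTTP API (covered in section 6) and collects the answers. The function names are made up for this example, and it assumes the server is running on the default port and that both models have already been pulled.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterable


def generate_local(model: str, prompt: str,
                   base_url: str = "http://localhost:11434") -> str:
    """Ask a locally running Ollama server for one complete response."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode()
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as reply:
        return json.loads(reply.read())["response"]


def compare_models(prompt: str, models: Iterable[str],
                   generate: Callable[[str, str], str] = generate_local
                   ) -> Dict[str, str]:
    """Run the same prompt against each model and map model name to answer."""
    return {model: generate(model, prompt) for model in models}


if __name__ == "__main__":
    # Example model names; use whichever models `ollama list` shows for you.
    answers = compare_models("Explain recursion in one sentence.",
                             ["deepseek-r1:1.5b", "mistral"])
    for name, text in answers.items():
        print(f"--- {name} ---\n{text}\n")
```

Passing the `generate` function as a parameter keeps the comparison logic separate from the network call, so you can swap in a different client later.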

5.4 Example Interaction

Here’s what a typical interaction might look like:

ollama run deepseek-r1:1.5b

>>> How would you explain quantum computing to a 10-year-old?

Quantum computing is like having a super-special toy that can play many games at the same time, while regular computers can only play one game at a time.

Imagine you have 10 different puzzles to solve. A regular computer would have to try each puzzle one after another. But a quantum computer is like having 10 friends who can all work on different puzzles at the same time!

These quantum computers use tiny, tiny things called "qubits" that can be like a coin that's both heads AND tails at the same time (which is pretty magical when you think about it). This special ability helps them solve really complicated problems much faster than regular computers.

>>> /bye

6. Harnessing the Power: Using Ollama’s HTTP API

While the command line interface is great for direct interactions, Ollama’s full potential is unlocked through its built-in HTTP API service. This allows you to integrate local AI models into virtually any application.

6.1 Understanding the API Basics

When Ollama is running, it automatically starts an HTTP server:

- The server runs on your local machine (localhost)

- It listens on port 11434 by default

- No additional setup is required – it’s ready to use immediately

6.2 Verifying the API Service

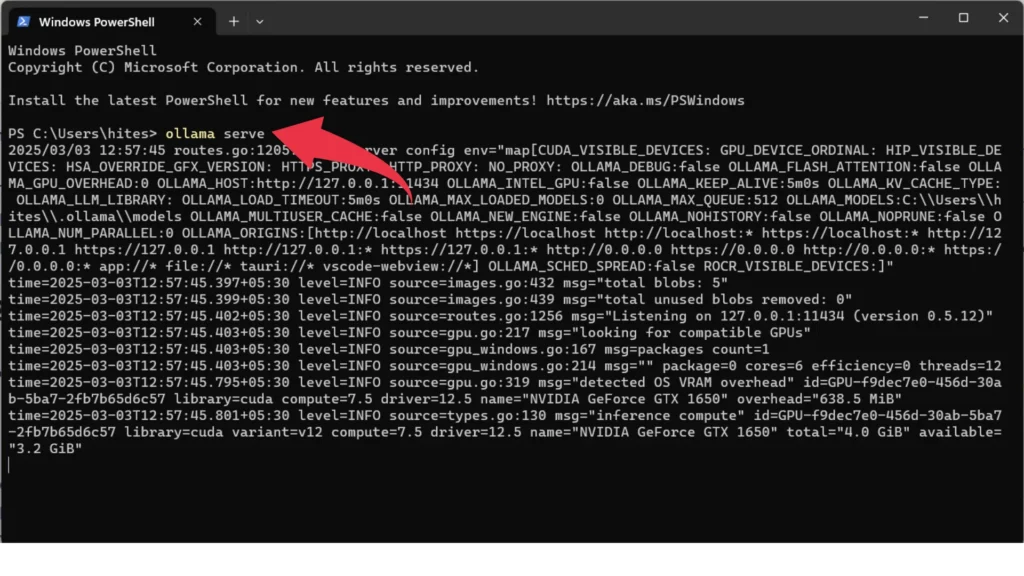

To confirm the API service is running:

- Open a terminal window

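- If Ollama is not already running, start the server with `ollama serve`; it prints startup information, including the address and port it listens on

- If the desktop app is already running, `ollama serve` reports that the port is already in use, which itself confirms the service is active

- You can also open http://localhost:11434 in a browser, which should display “Ollama is running”, or check your system tray for the small Ollama icon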
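You can run the same check from code before your application sends any real requests. This is a small sketch using only the standard library; it assumes the default address http://localhost:11434, and the helper name `is_ollama_up` is made up for this example.

```python
import urllib.error
import urllib.request


def is_ollama_up(base_url: str = "http://localhost:11434",
                 timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as reply:
            return reply.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


if __name__ == "__main__":
    print("Ollama is reachable" if is_ollama_up() else "Ollama is not reachable")
```

Note that this only confirms that something is answering on that port; it does not check that a particular model is installed.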

6.3 Making API Requests

Here are several ways to interact with the API:

Using curl (Command Line)

curl -X POST http://localhost:11434/api/generate -d '{
  "model": "deepseek-r1:1.5b",
  "prompt": "Explain how photosynthesis works"
}'

Using Python with the requests Library

import requests

# /api/generate streams its output by default; "stream": False asks for one JSON object
response = requests.post(
    "http://localhost:11434/api/generate",
    json={
        "model": "deepseek-r1:1.5b",
        "prompt": "Write a short poem about technology",
        "stream": False,
    },
)
response.raise_for_status()  # fail loudly on HTTP errors
print(response.json()["response"])

Using the Official Ollama Python Library

import ollama

response = ollama.generate(

model='deepseek-r1:1.5b',

prompt='What are the main features of Python programming language?'

)

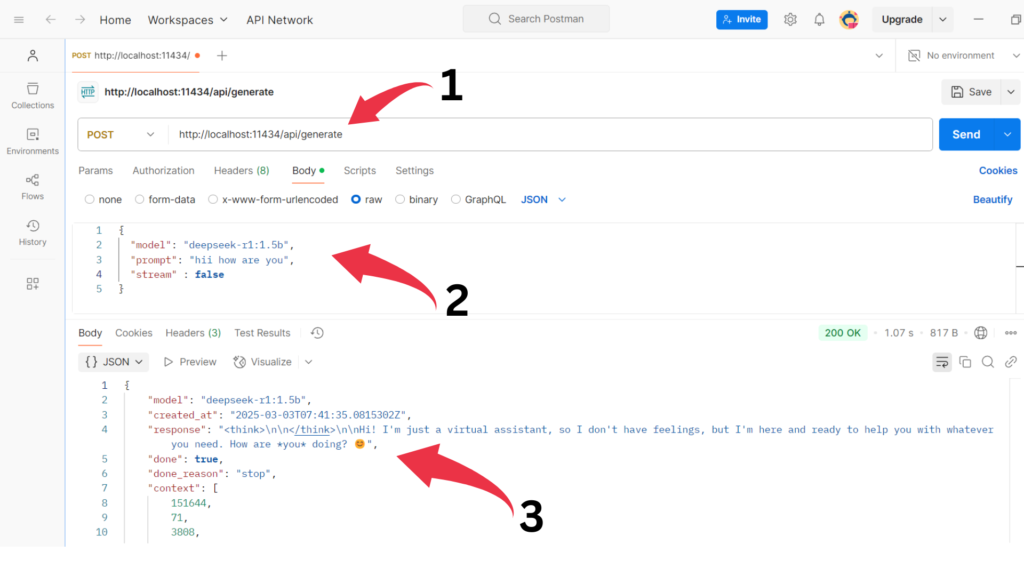

print(response['response'])Using the POSTMAN
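By default, /api/generate streams its answer as a series of JSON objects, one per line, each carrying a piece of text in `response` and a `done` flag on the last one. If you want output to appear as it is generated, you can read those lines one at a time. The sketch below uses only the standard library; the function names are made up for this example.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text fragment from each JSON line of a streamed reply."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the stream


def stream_generate(model: str, prompt: str,
                    base_url: str = "http://localhost:11434") -> Iterator[str]:
    """Send a prompt to a local Ollama server and yield text as it arrives."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode()
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as reply:
        # Iterating over the HTTP response yields one line at a time.
        yield from iter_stream_text(reply)


if __name__ == "__main__":
    for piece in stream_generate("deepseek-r1:1.5b", "Say hello in French."):
        print(piece, end="", flush=True)
    print()
```

Streaming makes long answers feel faster because the first words show up while the rest is still being generated.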

6.4 Key API Endpoints

The Ollama API provides several endpoints for different functions:

- /api/generate: For text generation with a specified model

- /api/chat: For multi-turn conversations

- /api/embeddings: For creating vector embeddings of text (newer releases also offer /api/embed)

- /api/tags: For listing the models available locally

- /api/show: For retrieving details about a specific model
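The /api/chat endpoint differs from /api/generate in that you send the whole conversation so far as a list of messages, each with a `role` and `content`. The server does not remember earlier turns, so your code appends each reply to the history itself. A minimal sketch, with made-up helper names and the default local address assumed:

```python
import json
import urllib.request
from typing import Dict, List

Message = Dict[str, str]


def add_turn(history: List[Message], role: str, content: str) -> List[Message]:
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def chat(model: str, history: List[Message],
         base_url: str = "http://localhost:11434") -> str:
    """Send the full message history to /api/chat and return the reply text."""
    payload = json.dumps(
        {"model": model, "messages": history, "stream": False}
    ).encode()
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as reply:
        return json.loads(reply.read())["message"]["content"]


if __name__ == "__main__":
    model = "deepseek-r1:1.5b"
    history = add_turn([], "user", "Name one planet in our solar system.")
    answer = chat(model, history)
    print(answer)
    # Keep the assistant's reply so the follow-up question has context.
    history = add_turn(history, "assistant", answer)
    history = add_turn(history, "user", "How far is it from the sun?")
    print(chat(model, history))
```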

7. Advanced Usage: Custom Models and Fine-Tuning

For users who want to go beyond the basics, Ollama offers powerful customization options.

7.1 Creating Custom Models with Modelfiles

Modelfiles allow you to customize existing models with your own instructions, system prompts, and generation parameters:

- Create a text file called `Modelfile` (no extension)

- Define your custom model using the Modelfile syntax

- Example of a simple Modelfile:

FROM deepseek-r1:1.5b
SYSTEM You are an AI assistant specialized in medical information.

- Create your custom model with: `ollama create mymedicalassistant -f ./Modelfile`

- Run your custom model with: `ollama run mymedicalassistant`

7.2 Fine-Tuning for Specialized Tasks

Ollama does not train model weights itself, and full fine-tuning requires significant computational resources. It does support lighter customization through:

- Parameter adjustment: Modifying temperature, top_p, and other generation parameters

- System prompts: Providing detailed instructions to guide the model’s behavior

- Context examples: Including sample exchanges that demonstrate desired responses
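All three of these options can be expressed in a Modelfile using the `PARAMETER`, `SYSTEM`, and `MESSAGE` instructions. The sketch below builds such a file as a string; the function name and the sample values are made up for illustration, and it assumes each example message fits on a single line.

```python
from typing import Dict, Iterable, Tuple


def render_modelfile(base_model: str,
                     system_prompt: str,
                     parameters: Dict[str, float],
                     examples: Iterable[Tuple[str, str]] = ()) -> str:
    """Build Modelfile text from a base model, parameters, and sample exchanges."""
    lines = [f"FROM {base_model}"]
    for name, value in parameters.items():
        lines.append(f"PARAMETER {name} {value}")
    lines.append(f'SYSTEM """{system_prompt}"""')
    # Each example is a (user text, assistant text) pair of single-line strings.
    for user_text, assistant_text in examples:
        lines.append(f"MESSAGE user {user_text}")
        lines.append(f"MESSAGE assistant {assistant_text}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = render_modelfile(
        "deepseek-r1:1.5b",
        "You answer in one short paragraph.",
        {"temperature": 0.3, "top_p": 0.9},
        [("What is RAM?", "RAM is fast, temporary memory a computer uses while running programs.")],
    )
    print(text)
```

Save the output as `Modelfile` and pass it to `ollama create` to build the customized model.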

8. Integrating with Python AI Frameworks

Ollama works seamlessly with popular AI development frameworks:

8.1 LangChain Integration

Install Ollama support for LangChain: pip install langchain-ollama

from langchain_ollama.llms import OllamaLLM

llm = OllamaLLM(model="deepseek-r1:1.5b")
result = llm.invoke("Explain the concept of blockchain in simple terms")
print(result)

8.2 LlamaIndex Integration

Install Ollama support for LlamaIndex: pip install llama-index-llms-ollama

from llama_index.llms.ollama import Ollama

llm = Ollama(model="deepseek-r1:1.5b", request_timeout=120.0)
response = llm.complete("Who is Paul Graham?")
print(response)

9. Hardware Considerations: Getting the Best Performance

To get the most out of Ollama, it’s important to understand how hardware affects performance:

9.1 CPU vs. GPU Performance

- CPU-only: All models will run on CPU, but larger models may respond slowly

- NVIDIA GPUs: Provide significant acceleration with CUDA support

- AMD GPUs: Limited support through ROCm on certain systems

- Apple Silicon: M1/M2/M3 chips offer excellent performance for their size

9.2 Memory Requirements

As a general guideline:

- 8GB RAM: Sufficient for small models (up to 7B parameters)

- 16GB RAM: Comfortable for medium models (8B-13B parameters)

- 32GB+ RAM: Required for large models (30B+ parameters)

9.3 Storage Considerations

Models require significant disk space:

- Small models: 2-4GB each

- Medium models: 5-10GB each

- Large models: 15-40GB+ each

Plan your storage accordingly, especially if you intend to download multiple models.
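Before pulling a large model, it can help to check how much free space you have. This is a small sketch using the standard library; the 5GB safety reserve is an arbitrary assumption, and `path` should point at the drive where your models are stored.

```python
import shutil


def free_space_gb(path: str = ".") -> float:
    """Return the free space, in GB, on the drive that contains path."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for(model_size_gb: float, path: str = ".",
                 reserve_gb: float = 5.0) -> bool:
    """Check that the model fits while leaving reserve_gb of space free."""
    return free_space_gb(path) >= model_size_gb + reserve_gb


if __name__ == "__main__":
    print(f"Free space: {free_space_gb():.1f} GB")
    for label, size in (("small", 4), ("medium", 10), ("large", 40)):
        verdict = "fits" if has_room_for(size) else "does not fit"
        print(f"A {label} model (~{size} GB) {verdict}")
```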

10. Real-World Applications: What Can You Build with Ollama?

The possibilities with Ollama are virtually limitless. Here are some practical applications to inspire your projects:

10.1 Personal AI Assistant

Create a chatbot that runs entirely on your computer:

- Helps with writing and editing

- Answers questions without internet access

- Provides information without privacy concerns

10.2 Content Creation Tools

Develop applications for:

- Blog post generation and optimization

- Social media content creation

- Email drafting and response suggestions

10.3 Code Development Assistant

Build tools that help with:

- Code generation and completion

- Debugging assistance

- Documentation writing

10.4 Educational Applications

Create learning tools like:

- Interactive tutoring systems

- Customized explanation generators

- Study aid applications

10.5 Data Analysis Support

Implement systems for:

- Data interpretation assistance

- Report generation

- Pattern recognition in complex datasets

10.6 AI Agents

Build AI agents that:

- Use different tools to perform tasks

- Solve complex tasks with multiple agents

- Automate your workflow with a team of agents

To learn more about AI agents, check out my blog post: AI Agents are here: New Era of AI Use-Cases

11. Troubleshooting Common Issues

Even with Ollama’s straightforward design, you might encounter some challenges. Here’s how to address common issues:

11.1 Model Download Failures

If you encounter problems downloading models:

- Check your internet connection

- Ensure you have sufficient disk space

- Try restarting Ollama with `ollama serve`

- Attempt the download again with `ollama pull modelname`

11.2 Performance Issues

If models are running slowly:

- Try a smaller model that better matches your hardware

- Close other resource-intensive applications

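- Adjust generation parameters (for example, lower num_predict to cap response length, or reduce num_ctx to shrink the context window)

- On Windows, if you run Ollama inside WSL2 or Docker, ensure virtualization is enabled in the BIOS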
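When you call the API, generation parameters go in an `options` object inside the request body. The sketch below builds such a request with `num_predict` (the maximum number of tokens to generate) and `num_ctx` (the context window size); the helper name and the example values are made up for illustration.

```python
import json
from typing import Any, Dict, Optional


def build_generate_payload(model: str, prompt: str,
                           num_predict: Optional[int] = None,
                           num_ctx: Optional[int] = None) -> Dict[str, Any]:
    """Build an /api/generate request body with optional speed-related limits."""
    options: Dict[str, int] = {}
    if num_predict is not None:
        options["num_predict"] = num_predict  # cap on generated tokens
    if num_ctx is not None:
        options["num_ctx"] = num_ctx  # context window size in tokens
    payload: Dict[str, Any] = {"model": model, "prompt": prompt, "stream": False}
    if options:
        payload["options"] = options
    return payload


if __name__ == "__main__":
    body = build_generate_payload("deepseek-r1:1.5b", "Summarize what an API is.",
                                  num_predict=128, num_ctx=2048)
    print(json.dumps(body, indent=2))
```

Shorter responses finish sooner, and a smaller context window reduces memory use, so both settings are worth trying before switching to a smaller model.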

11.3 API Connection Problems

If you can’t connect to the API:

- Verify Ollama is running with `ollama serve`

- Confirm you’re using the correct port (usually 11434)

- Check for firewall settings that might be blocking connections

- Ensure you’re using localhost or 127.0.0.1 in your requests

12. Conclusion: Why Ollama Matters in the AI Landscape

Ollama represents a significant shift in how we interact with AI technology. By bringing powerful language models directly to personal computers, it democratizes access to advanced AI capabilities while addressing key concerns about privacy, cost, and control.

The ability to run models locally without an internet connection creates possibilities for:

- Developers working in sensitive environments

- Researchers exploring model capabilities without API costs

- Privacy-conscious users who want to keep their data local

- Students and hobbyists learning about AI on a budget

As open-source models continue to improve in quality and efficiency, tools like Ollama will only become more powerful and more relevant to our daily computing needs.

The future of Ollama looks promising, with ongoing development in areas like:

- Support for more diverse model architectures

- Improved performance optimization

- Enhanced tools for model customization

- Broader framework integration

Whether you’re just starting your AI journey or you’re an experienced developer looking for a more flexible approach to working with language models, Ollama provides a valuable addition to your toolkit.

Start exploring the possibilities today by downloading Ollama and experiencing the power of local AI for yourself.
